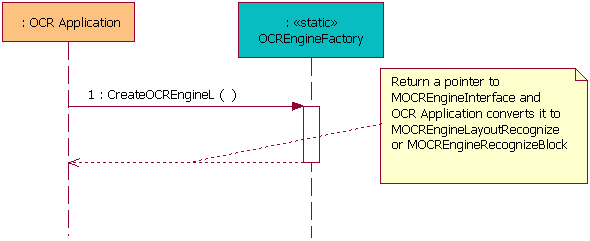

Figure 4: Initialize OCR service

To use the OCR API, an application must first link against the OcrSrv library and then create an OCR engine instance that matches the required recognition type.

In general, using the OCR API involves the following steps:

1. Implement the MOCREngineObserver interface.
2. Call the OCREngineFactory::CreateOCREngineL method and pass the OCREngineFactory::TEngineType information to specify the recognition type.
3. Convert the pointer returned by OCREngineFactory::CreateOCREngineL to either MOCREngineLayoutRecognize or MOCREngineRecognizeBlock according to the recognition type.
4. Release the engine with the OCREngineFactory::ReleaseOCREngine method.

See the following section for detailed instructions on how to use the interfaces.

To create the recognition instance, the client application must provide three parameters to OCREngineFactory::CreateOCREngineL: a reference to an observer class derived from MOCREngineObserver, a TOcrEngineEnv object that sets the recognition thread priority and maximum heap size, and an OCREngineFactory::TEngineType enumeration value that specifies the recognition type. After creating the recognition instance, the client application receives a MOCREngineInterface pointer and must convert it to either MOCREngineLayoutRecognize or MOCREngineRecognizeBlock according to the selected type.

Figure ‘Initialize OCR service’ shows the OCR API initialization process.


The following code snippet demonstrates how to create a recognition instance that supports document layout analysis. Creating an instance that supports region recognition is very similar, so it is not presented here.

The OCR service performs the recognition in a worker thread, whose priority is set through TOcrEngineEnv.

    TOcrEngineEnv env; // Not const, because its fields are assigned below
    env.iPriority = EPriorityLess; // Set the recognition thread's priority
    env.iMaxHeapSize = 1200 * KMinHeapGrowBy; // Set the thread's maximum heap size

    // Create the OCR engine instance. Note that "observer" is an object
    // whose class implements MOCREngineObserver.
    MOCREngineInterface* myEngine = OCREngineFactory::CreateOCREngineL(observer,
                                                                       env,
                                                                       OCREngineFactory::EEngineLayoutRecognize);

    // Convert the instance from a MOCREngineInterface pointer to MOCREngineLayoutRecognize
    MOCREngineLayoutRecognize* layoutEngine = static_cast<MOCREngineLayoutRecognize*>(myEngine);

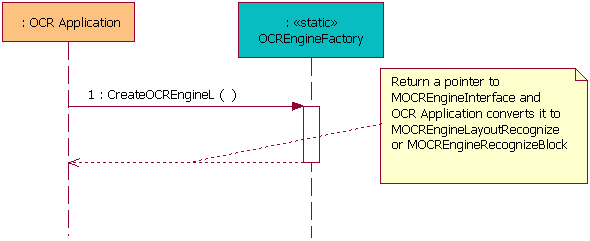

Recognition with layout analysis consists of two steps. The first step analyzes the entire image and returns information about the areas that contain text. The second step recognizes some or all of those areas according to the user's selection, which is passed as an array of area indices. The layout analysis and recognition results are delivered asynchronously through the callback functions MOCREngineObserver::LayoutComplete and MOCREngineObserver::RecognizeComplete in the observer class.

Figure ‘Recognize with layout’ shows the process of recognition with layout analysis.

Figure 5: Recognize with layout

The following code snippet demonstrates how to use the layout recognition interface. After the layout analysis, the MOCREngineObserver::LayoutComplete function is called to inform the client application about the text blocks that were found. During the recognition process, the client application continuously receives progress information through the MOCREngineObserver::RecognizeProcess function. After the recognition, the result is provided through the MOCREngineObserver::RecognizeComplete function.

One or two supported languages must be set active through the MOCREngineBase::SetActiveLanguageL method of the base interface. Setting two languages allows recognition of an image that contains both of them. For example, a Chinese document may contain English words, in which case the user should set both English and Chinese as active languages.

Note that no more than two languages can be mixed, and a mix must combine one western language with one eastern language. It is not possible, for example, to set both Chinese and Japanese as active languages.
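The mixing rule can be checked on the client side before the languages are passed to the engine. The following is a minimal stand-alone sketch in plain C++, not Symbian code: the `Script` enumeration, the `Language` struct and `IsValidActiveLanguageSet` are hypothetical names introduced for illustration, and the sketch assumes the rule means "one language alone, or exactly one western plus one eastern language".

```cpp
#include <vector>

// Hypothetical script families, used only in this sketch.
enum class Script { Western, Eastern };

// Hypothetical description of a language; the real API uses TLanguage values.
struct Language {
    const char* name;
    Script script;
};

// Returns true when the selection follows the rule as interpreted above:
// a single language, or one western language combined with one eastern language.
bool IsValidActiveLanguageSet(const std::vector<Language>& languages) {
    if (languages.size() == 1) {
        return true;
    }
    if (languages.size() != 2) {
        return false; // Empty selection or more than two languages
    }
    return languages[0].script != languages[1].script;
}
```

With this check, English plus Chinese is accepted, while Chinese plus Japanese is rejected because both are eastern languages.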

    /**
     * Set active languages
     */
    RArray<TLanguage> languages;
    languages.Append(ELangEnglish);    // A western language
    languages.Append(ELangPrcChinese); // An eastern language
    TRAPD(err, myEngine->Base()->SetActiveLanguageL(languages));

    /**
     * Layout analysis
     */
    TOCRLayoutSetting layoutSettings;
    layoutSettings.iBrightness = TOCRLayoutSetting::ENormal;
    layoutSettings.iSkew = ETrue; // Setting this to ETrue triggers the geometrical adjustment

    _LIT(KFileName, "C:\\image.mbm");
    CFbsBitmap image;
    image.Load(KFileName);
    const TInt handle = image.Handle(); // Get the handle from the font and bitmap server

    // Type of myEngine is MOCREngineLayoutRecognize
    TRAPD(layoutErr, myEngine->LayoutAnalysisL(handle, layoutSettings));
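The order in which the observer callbacks arrive can be pictured with a small stand-alone sketch. It is plain C++ rather than Symbian code: `ObserverSketch`, `LoggingObserver`, `SimulateEngine` and the `aPercent` parameter are hypothetical stand-ins, and the real MOCREngineObserver signatures may differ. The sketch also calls the observer synchronously, whereas the real engine reports from its working thread and starts recognition only after the client calls RecognizeL.

```cpp
#include <string>
#include <vector>

// Stand-in for the Symbian error code KErrNone.
const int kErrNone = 0;

// Hypothetical, simplified observer interface (not the real MOCREngineObserver).
class ObserverSketch {
public:
    virtual ~ObserverSketch() {}
    virtual void LayoutComplete(int aError, int aBlockCount) = 0;
    virtual void RecognizeProcess(int aPercent) = 0;
    virtual void RecognizeComplete(int aError) = 0;
};

// Records every callback so the order of events can be inspected afterwards.
class LoggingObserver : public ObserverSketch {
public:
    void LayoutComplete(int aError, int aBlockCount) override {
        if (aError == kErrNone) {
            blockCount = aBlockCount; // Remember how many text blocks were found
        }
        events.push_back("layout");
    }
    void RecognizeProcess(int aPercent) override {
        lastPercent = aPercent;
        events.push_back("progress");
    }
    void RecognizeComplete(int aError) override {
        events.push_back(aError == kErrNone ? "complete" : "failed");
    }

    std::vector<std::string> events;
    int blockCount = 0;
    int lastPercent = 0;
};

// Simulated engine: reports layout, then progress in 25% steps, then completion.
void SimulateEngine(ObserverSketch& observer, int blockCount) {
    observer.LayoutComplete(kErrNone, blockCount);
    for (int percent = 25; percent <= 100; percent += 25) {
        observer.RecognizeProcess(percent);
    }
    observer.RecognizeComplete(kErrNone);
}
```

Recording the events in a list keeps the sketch easy to inspect; a real observer would instead update the user interface or start the next request from these callbacks.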

The MOCREngineObserver::LayoutComplete function is called after the layout analysis has completed. Its aError parameter indicates whether the analysis succeeded, and aBlockCount gives the number of text areas identified. aBlocks is a TOCRBlockInfo array that stores the position and extent of every identified text area. The user can then select which areas need to be recognized.

    RArray<TInt> blockIndex; // Indices of the blocks to recognize

    for (TInt i = 0; i < blockCount; i++) // blockCount comes from the callback parameter aBlockCount
        {
        // Suppose the block count is four and blocks No.0 and No.1 should not be recognized.
        if (i == 0 || i == 1)
            {
            continue;
            }
        blockIndex.Append(i);
        }

    // Recognize blocks No.2 and No.3.
    TRAPD(err, myEngine->RecognizeL(iRecogSettings, blockIndex));
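The selection loop above hard-codes the skipped indices. As a more general illustration, the following stand-alone sketch in plain C++ builds the index list from an arbitrary set of skipped blocks. `SelectBlocks` is a hypothetical helper, and `std::vector` and `std::set` stand in for the Symbian `RArray` used by the real API.

```cpp
#include <set>
#include <vector>

// Returns every block index in [0, blockCount) that is not in 'skipped'.
// The result corresponds to the index array passed to the recognition call.
std::vector<int> SelectBlocks(int blockCount, const std::set<int>& skipped) {
    std::vector<int> selected;
    for (int i = 0; i < blockCount; ++i) {
        if (skipped.count(i) == 0) {
            selected.push_back(i);
        }
    }
    return selected;
}
```

Calling `SelectBlocks(4, {0, 1})` reproduces the example from the text: blocks No.2 and No.3 are selected.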

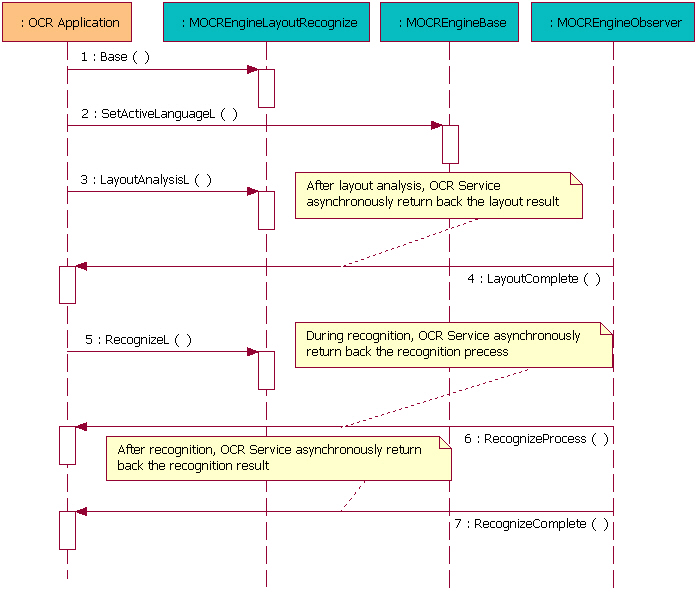

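Region recognition functions are declared in MOCREngineRecognizeBlock. To use this type of recognition, pass EEngineRecognizeBlock to OCREngineFactory::CreateOCREngineL as the type of the OCR engine. There are two types of region recognition: recognizing a specified text area (block), and recognizing a special region whose content is an e-mail address, a phone number or a web address.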

Figure ‘Recognize block’ shows the process of recognizing a specified text area.

Figure 6: Recognize block

The following code snippet demonstrates how to start a typical region recognition.

    TOCRLayoutBlockInfo layoutInfo;
    layoutInfo.iLayout = EOcrLayoutTypeH; // Set when the text lines are horizontal
    layoutInfo.iText = EOcrTextMultiLine; // Set when the area contains more than one line
    layoutInfo.iBackgroundColor = EOcrBackgroundLight; // Set when the text is darker than the background
    layoutInfo.iRect.SetRect(0, 0, 100, 100); // Set the recognition area

    _LIT(KFileName, "C:\\image.mbm");
    CFbsBitmap image;
    image.Load(KFileName);
    const TInt handle = image.Handle(); // Get the handle of the image

    // Type of myEngine is MOCREngineRecognizeBlock
    TRAPD(err, myEngine->RecognizeBlockL(handle, layoutInfo));

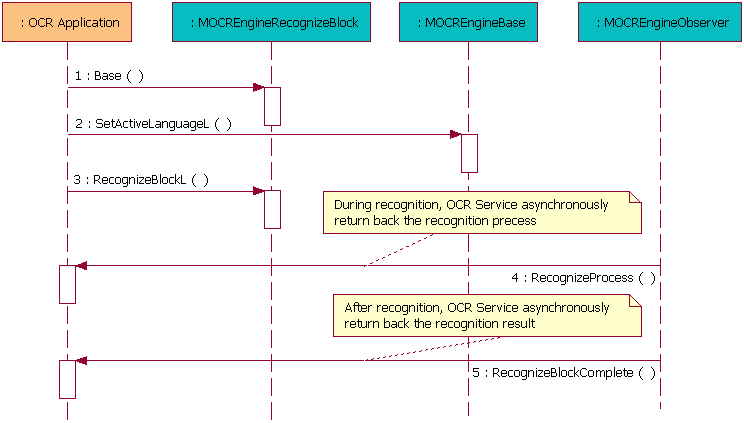

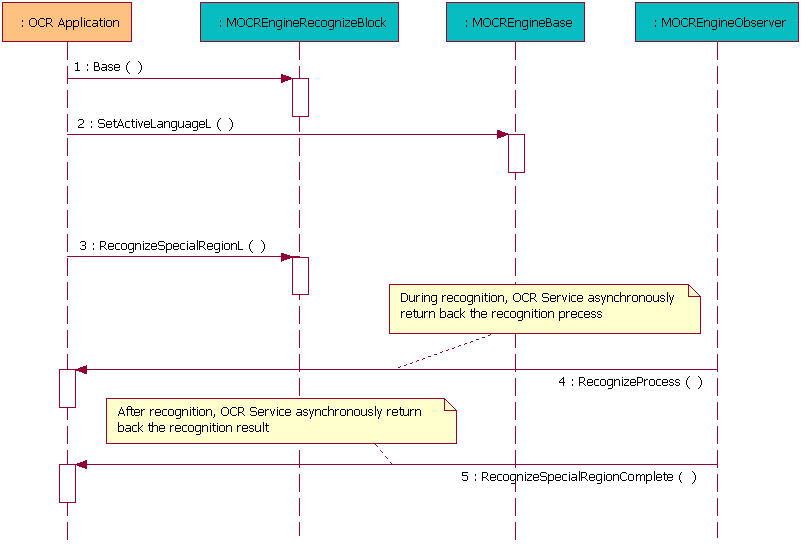

Figure ‘Recognize special region’ shows the process of special content recognition.

Figure 7: Recognize special region

The following code snippet demonstrates how to start a typical special region recognition. The user can specify the text content to be either E-mail addresses, phone numbers or web addresses.

    TRegionInfo regionInfo;
    regionInfo.iBackgroundColor = EOcrBackgroundLight;
    regionInfo.iType = TRegionInfo::EEmailAddress;
    regionInfo.iRect.SetRect(0, 0, 100, 100);

    _LIT(KFileName, "C:\\image.mbm");
    CFbsBitmap image;
    image.Load(KFileName);
    const TInt handle = image.Handle(); // Get the handle of the image

    // Type of myEngine is MOCREngineRecognizeBlock
    TRAPD(err, myEngine->RecognizeSpecialRegionL(handle, regionInfo));

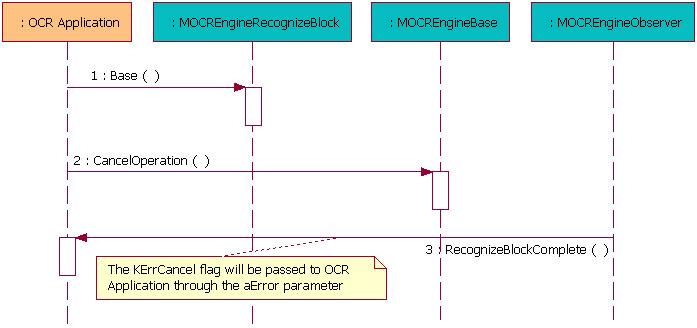

During recognition, the client application can cancel the recognition process. A cancel request is also handled asynchronously: the observer functions in MOCREngineObserver report KErrCancel through their aError parameter. Both recognition with layout analysis and region recognition can be canceled.

Figure ‘Cancel recognition’ shows the process of canceling the recognition.

Figure 8: Cancel recognition

The following code snippet demonstrates how to issue a typical Cancel request.

    myEngine->Base()->CancelOperation();
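Because the cancel request is handled asynchronously, the client should not treat the operation as finished until an observer callback has reported KErrCancel. The following stand-alone sketch in plain C++ shows one possible way to track this on the client side. `RecognitionSession` and its member functions are hypothetical and not part of the OCR API; only the values of KErrNone (0) and KErrCancel (-3) are the standard Symbian error codes.

```cpp
// Values of the standard Symbian error codes used in this sketch.
const int KErrNone = 0;
const int KErrCancel = -3;

// Hypothetical client-side bookkeeping for one recognition request.
class RecognitionSession {
public:
    enum State { EIdle, EBusy, ECancelling };

    // Call when a layout analysis or recognition request has been issued.
    void Start() {
        iState = EBusy;
        iLastError = KErrNone;
    }

    // Call together with CancelOperation(): the request is only issued here,
    // the operation itself is still pending.
    void RequestCancel() {
        if (iState == EBusy) {
            iState = ECancelling;
        }
    }

    // Call from the observer callback that delivers aError.
    void OnComplete(int aError) {
        iLastError = aError;
        iState = EIdle;
    }

    bool IsIdle() const { return iState == EIdle; }
    bool WasCancelled() const { return iLastError == KErrCancel; }

private:
    State iState = EIdle;
    int iLastError = KErrNone;
};
```

In this sketch the session stays in the cancelling state after `RequestCancel()` and becomes idle only when `OnComplete(KErrCancel)` arrives.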

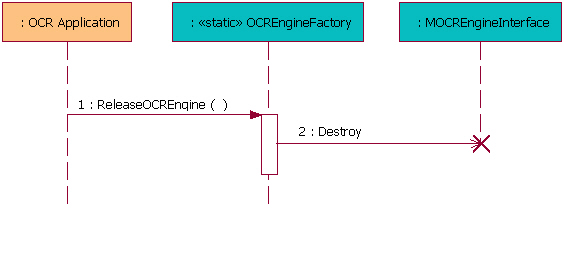

To release the OCR engine instance, call the OCREngineFactory::ReleaseOCREngine function.

Figure ‘Release the OCR API’ shows how to release the OCR API.

Figure 9: Release the OCR API

The following code snippet demonstrates how to use OCREngineFactory::ReleaseOCREngine to release the layout recognition engine.

Note that only one type of recognition engine can exist at a time. The existing instance must be released before another instance is created with OCREngineFactory::CreateOCREngineL.

    OCREngineFactory::ReleaseOCREngine(myEngine);

All exceptions are reported through the Symbian OS leave mechanism.

| Exception | Description |
| --- | --- |
| KErrNoMemory | Reported when there is not enough memory for the layout analysis or the recognition. |
| KErrServerBusy | Reported when a new recognition request arrives while the OCR engine is busy. |
| KErrAbort | The child thread does not exist or the operation was aborted. |
| KErrArgument | Bad parameters. |
| KErrNotSupported | Some functionality is not supported. |
| KErrGeneral | General system-level errors. |
| KErrNotFound | No engine or database found. |

| Exception | Description |
| --- | --- |
| KErrOcrBadImage | Bad image or unsupported image format (only 24-bit color or 8-bit grayscale images in bitmap format are supported). |

| Exception | Description |
| --- | --- |
| KErrOcrBadRegion | Bad layout region. |
| KErrOcrNotSetLanguage | Before layout analysis or recognition, you must set one or two active languages. |

| Exception | Description |
| --- | --- |
| KErrOcrBadLanguage | Unsupported language. |
| KErrOcrBadDictFile | Bad database file. |
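For logging or user feedback, a client may want to translate these codes into readable messages. The following stand-alone sketch in plain C++ covers only the system-wide Symbian codes from the first table, using their standard values from e32err.h. `DescribeOcrError` is a hypothetical helper, and the OCR-specific codes (KErrOcrBadImage and the others) are left out because their numeric values are not given in this document.

```cpp
#include <string>

// Standard system-wide Symbian error code values (as defined in e32err.h).
const int KErrNotFound = -1;
const int KErrGeneral = -2;
const int KErrNoMemory = -4;
const int KErrNotSupported = -5;
const int KErrArgument = -6;
const int KErrServerBusy = -16;
const int KErrAbort = -39;

// Hypothetical helper: maps a leave code to the description from the table above.
std::string DescribeOcrError(int aError) {
    switch (aError) {
        case KErrNoMemory:
            return "Not enough memory for the layout analysis or the recognition";
        case KErrServerBusy:
            return "New request arrived while the OCR engine is busy";
        case KErrAbort:
            return "Child thread does not exist or the operation was aborted";
        case KErrArgument:
            return "Bad parameters";
        case KErrNotSupported:
            return "Functionality is not supported";
        case KErrGeneral:
            return "General system-level error";
        case KErrNotFound:
            return "No engine or database found";
        default:
            return "Unrecognized error code"; // Includes the OCR-specific codes
    }
}
```

A typical use would be to pass the error variable filled in by TRAPD, or the aError value received in an observer callback, to such a helper before writing it to a log.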

Most of the dynamic memory consumption comes from the OCR engine itself. Heap consumption is currently around 900 KB to 1000 KB, depending on the image size and language variants.

The OCR API does not explicitly support any kind of extension.